5 Best Ways to Promote DeepSeek


Author: Rico, Comments: 0, Views: 15, Posted: 25-02-01 11:14

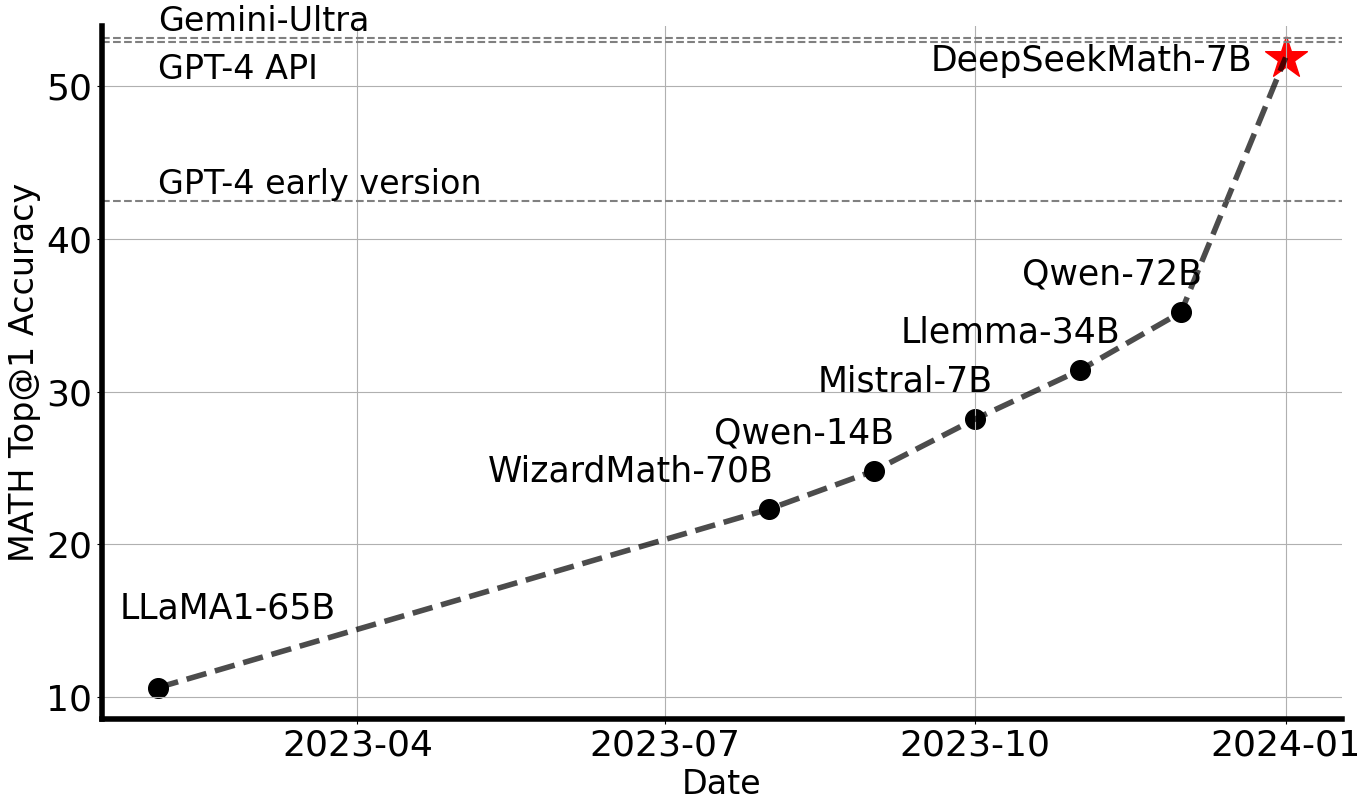

According to DeepSeek's internal benchmark testing, DeepSeek V3 outperforms both downloadable, "openly" available models and "closed" AI models that can only be accessed through an API. By improving code understanding, generation, and editing capabilities, the researchers have pushed the boundaries of what large language models can achieve in programming and mathematical reasoning. The paper explores the potential of DeepSeek-Coder-V2 to push the boundaries of mathematical reasoning and code generation for large language models. DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models and AutoCoder: Enhancing Code with Large Language Models are related papers that explore similar themes and developments in the field of code intelligence. These improvements matter because they have the potential to push the boundaries of what large language models can do in mathematical reasoning and code-related tasks. Transparency and Interpretability: Enhancing the transparency and interpretability of the model's decision-making process could increase trust and facilitate better integration with human-led software development workflows.

While the paper presents promising results, it is important to consider the potential limitations and areas for further research, such as generalizability, ethical considerations, computational efficiency, and transparency. The researchers have developed a new AI system called DeepSeek-Coder-V2 that aims to overcome the limitations of existing closed-source models in the field of code intelligence. The paper presents a compelling approach to addressing the limitations of closed-source models in code intelligence. This approach ensures that the quantization process can better accommodate outliers by adapting the scale according to smaller groups of elements. Advancements in Code Understanding: The researchers have developed techniques to enhance the model's ability to understand and reason about code, enabling it to better understand the structure, semantics, and logical flow of programming languages. Generalizability: While the experiments demonstrate strong performance on the tested benchmarks, it is important to evaluate the model's ability to generalize to a wider range of programming languages, coding styles, and real-world scenarios.
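The remark about quantization scales can be illustrated with a small sketch. It is not taken from any DeepSeek code: the function names, the signed 8-bit integer range, and the group size are all assumptions chosen for illustration. The idea shown is only that giving each small group of elements its own scale keeps one outlier from wiping out the precision of unrelated values.

```python
from typing import List, Tuple


def quantize_groupwise(
    values: List[float], group_size: int, levels: int = 127
) -> Tuple[List[int], List[float]]:
    """Quantize to signed integers, using one absmax scale per group.

    `levels=127` assumes a signed 8-bit range; this is an illustrative choice.
    """
    quantized: List[int] = []
    scales: List[float] = []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        max_abs = max(abs(v) for v in group)
        # Avoid division by zero for an all-zero group.
        scale = max_abs / levels if max_abs > 0 else 1.0
        scales.append(scale)
        quantized.extend(round(v / scale) for v in group)
    return quantized, scales


def dequantize_groupwise(
    quantized: List[int], scales: List[float], group_size: int
) -> List[float]:
    """Map integers back to floats using the scale of each element's group."""
    return [q * scales[i // group_size] for i, q in enumerate(quantized)]


def roundtrip_errors(values: List[float], group_size: int) -> List[float]:
    """Absolute error per element after quantizing and dequantizing."""
    quantized, scales = quantize_groupwise(values, group_size)
    restored = dequantize_groupwise(quantized, scales, group_size)
    return [abs(a - b) for a, b in zip(values, restored)]


# Hypothetical data: seven small values and one large outlier at the end.
SAMPLE = [0.01, -0.02, 0.03, 0.015, 0.02, -0.01, 0.025, 100.0]
```

With `roundtrip_errors(SAMPLE, 8)` a single scale is set by the outlier, so the small values all round to zero. With `roundtrip_errors(SAMPLE, 4)` the first four values get their own, much smaller scale and survive the round trip nearly intact; only the group that contains the outlier still loses precision.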


These advances are showcased through a series of experiments and benchmarks, which demonstrate the system's strong performance in various code-related tasks. LLaVA-OneVision is the first open model to achieve state-of-the-art performance in three important computer vision scenarios: single-image, multi-image, and video tasks. First up is Meta-Llama-3.1-405B-Instruct. On the one hand, an MTP objective densifies the training signals and may improve data efficiency. Addressing the model's efficiency and scalability will be important for wider adoption and real-world applications. Combining these efforts, we achieve high training efficiency. Massive Training Data: Trained from scratch on 2T tokens, including 87% code and 13% linguistic data in both English and Chinese. This is a Plain English Papers summary of a research paper called DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence. Jordan Schneider: Alessio, I would like to come back to one of the things you said about this breakdown between having these researchers and the engineers who are more on the system side doing the actual implementation. Both ChatGPT and DeepSeek let you click to view the source of a particular recommendation; however, ChatGPT does a better job of organizing all its sources to make them easier to reference, and when you click on one it opens the Citations sidebar for easy access.
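The claim that an MTP (multi-token prediction) objective "densifies the training signals" can be made concrete with a counting sketch. This is only arithmetic under a simplifying assumption (each position is supervised on every one of the next `depth` tokens that exists in the sequence); it does not model how any particular system implements MTP, and the function name is hypothetical.

```python
def count_training_targets(seq_len: int, depth: int = 1) -> int:
    """Count (position, future token) pairs available as training targets.

    depth=1 corresponds to ordinary next-token prediction; depth=D assumes
    each position also predicts the tokens 2..D steps ahead, when they exist.
    """
    if seq_len < 0 or depth < 1:
        raise ValueError("seq_len must be >= 0 and depth must be >= 1")
    # A prediction k steps ahead is possible from seq_len - k positions.
    return sum(max(seq_len - k, 0) for k in range(1, depth + 1))
```

For a sequence of 8 tokens, `count_training_targets(8, 1)` counts the 7 ordinary next-token targets, while `count_training_targets(8, 2)` adds the 6 two-step-ahead targets on the same data.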

As the field of code intelligence continues to evolve, papers like this one will play a crucial role in shaping the future of AI-powered tools for developers and researchers. I doubt that LLMs will replace developers or make someone a 10x developer. It's HTML, so I'll have to make a few changes to the ingest script, including downloading the page and converting it to plain text. Please make sure you are using the latest version of text-generation-webui. DeepSeek has been able to develop LLMs rapidly by using an innovative training process that relies on trial and error to self-improve. Get started with CopilotKit using the following command. I get an empty list. If I'm building an AI app with code execution capabilities, such as an AI tutor or AI data analyst, E2B's Code Interpreter will be my go-to tool. They are not meant for mass public consumption (though you are free to read/cite), as I will only be noting down information that I care about. A minor nit: neither the os nor json imports are used.
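The ingest-script change mentioned above (downloading an HTML page and converting it to plain text) might look roughly like the following. This is a minimal sketch using only the Python standard library; the original ingest script is not shown in the post, so the function names here are hypothetical and the text extraction is deliberately simple.

```python
from html.parser import HTMLParser
from typing import List
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._chunks: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside skipped elements.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self) -> str:
        return "\n".join(self._chunks)


def html_to_text(html: str) -> str:
    """Convert an HTML string to plain text, one text chunk per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its plain text (requires network access)."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return html_to_text(html)
```

Splitting the conversion (`html_to_text`) from the download (`fetch_text`) keeps the parsing step testable without a network connection. A real ingest script would likely want more careful handling of whitespace and block-level elements than this sketch provides.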
